SAP HANA support for HPE nPar on Superdome Flex update

In addition to the outstanding support for virtualization technologies like PowerVM for HANA and the lukewarm support for VMware by SAP, SAP also supports other technologies that allow larger systems to be subdivided into smaller nodes. Note that I did not say virtualization, but subdivision. Physical partitioning (PPAR) is a technology invented in the 1990s and only allows components, e.g. boards or NUMA nodes, to be allocated to a separate workload from others on the same physical system.

On October 22, 2018, SAP updated its SAP Note for HPE nPar technology.[i] With this update, SAP now supports nPars with Superdome Flex. Granularity is incredibly fine (not). As noted in the SAP note, “Via nPartitions, the following partition sizes are supported in terms of the number of sockets:

- Skylake based architecture: ScaleUp 16s, 12s, 8s, 4s; ScaleOut 4s, 8s, 16s”

Or, to put it in terms of cores: each socket has 28 cores, so granularity is 112 cores. You need only 20 cores? No problem, you get to consume 112. You need 113 cores? Also no problem, you get to consume 224 cores.

But, on the positive side, these nPars are “electrically isolated”, which has two really important implications. First, the only way to isolate one or more Superdome Flex drawers into a separate nPar is to physically change the mesh wiring of the entire system. That means that if you decide to change the configuration of nPars, dynamic changes would be the exact opposite of what is supported. In fact, according to customer reports, HPE requires a Statement of Work service contract to come out and rewire the system and it takes multiple days … one customer reported multiple weeks.

The second implication is that all resources on the node(s) in an nPar are dedicated to that nPar. In the above example, if you need 20 cores, you probably require around ½ TB of memory for BW or 1TB of memory for S/4. It is possible to configure an nPar with as little as 1.5TB of memory, which means that you might waste an entire TB if you only need ½ TB. Alternately, if you have other workloads on other nPars that require more cores and memory and you want to keep all drawers consistent to allow for future changes, you might actually have up to 6TB per drawer, meaning much more wasted memory if you only require ½ TB for a particular workload.

By the way, the only other elements that are shared when a system is broken up into physically isolated nPars are the frame(s), power supplies and the RMC – Rack Management Controller. PCIe cards cannot be shared due to the physical isolation, so by using nPars, you essentially take a very expensive system and carve it into a bunch of smaller and very expensive, isolated systems which are difficult to reconfigure. Alternately, if you really must use HPE technology for smaller workloads, you could purchase smaller systems at much lower prices.
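To make the core granularity concrete, here is a minimal Python sketch of the rounding described above. It assumes the 28-core Skylake sockets and the scale-up partition sizes quoted from the SAP note; the function names `smallest_npar` and `stranded_cores` are made up for illustration and are not part of any HPE or SAP tool.

```python
CORES_PER_SOCKET = 28                 # Skylake figure used in the text
SUPPORTED_SOCKETS = (4, 8, 12, 16)    # scale-up nPar sizes quoted from the SAP note


def smallest_npar(required_cores):
    """Return (sockets, cores) of the smallest supported nPar that fits."""
    for sockets in SUPPORTED_SOCKETS:
        cores = sockets * CORES_PER_SOCKET
        if cores >= required_cores:
            return sockets, cores
    raise ValueError("workload exceeds the largest supported nPar")


def stranded_cores(required_cores):
    """Cores dedicated to the nPar that the workload does not need."""
    _, cores = smallest_npar(required_cores)
    return cores - required_cores


for need in (20, 113):
    sockets, cores = smallest_npar(need)
    print(f"need {need} cores -> {sockets}-socket nPar with {cores} cores, "
          f"{stranded_cores(need)} stranded")
```

The same rounding applies to memory, since everything on the nodes in an nPar is dedicated to that nPar.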

I have really been trying to scratch my head and understand why anyone would want this type of 1990s era partitioning technology. HPE certainly does because it results in higher profits from selling larger systems with more aggregate capacity while giving the false appearance of flexibility. For customers, on the other hand, it offers massive waste and very limited flexibility.

My advice: Don’t be a sucker and get taken in by HPE’s misdirection play. Either purchase appropriately sized systems for each workload or purchase systems that offer real virtualization, such as IBM Power Systems, with fine-grained allocation of resources, sharing of components such as PCIe adapters and true server consolidation, but don’t purchase one of these massive HPE systems and then eliminate any perceived value of using such a large system by cutting it up into smaller systems.

[i] SAP Note 2103848 – SAP HANA on HPE nPartitions in production

SAP increases support for HANA on Power, now up to 16 concurrent production VMs with IBM PowerVM

On March 1, 2019, SAP updated SAP Note 2230704 – SAP HANA on IBM Power Systems with multiple – LPARs per physical host. Previously, up to 8 concurrent HANA production VMs could be supported on the Power E880 system with 16 sockets. Now, the new POWER9 based E980, also with 16 sockets, is supported with up to 16 concurrent HANA production VMs. As was the case prior to this update, each VM must have a minimum of 4 cores and 128GB and can grow to as large as 16TB for OLAP and 24TB for OLTP. The maximum VM count may be reduced by 1 if a shared pool is desired for one or more non-production HANA, any other SAP or non-SAP workloads. There is no restriction on the number of VMs that can run in a shared pool from an SAP perspective, but practical physical limits are usually hit before any PowerVM architectural limits. CPU capacity not used by the production VMs may be shared, temporarily, with VMs in the shared pool using a proprietary technology called dedicated-donating where the production VM, which owns the CPU capacity, may loan part of it to the shared pool and get it back immediately when needed for that production workload.
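The rules in the paragraph above can be written down as a small planning check. This is only a sketch: the limits are the ones quoted in this post, the `ProdVM` class and `check_landscape` function are hypothetical names, and the treatment of the shared pool (one fewer production VM) is my reading of "may be reduced by 1". Always check the current SAP note before relying on any of these numbers.

```python
from dataclasses import dataclass

MAX_PROD_VMS = 16                                  # E980 limit cited above
MIN_CORES = 4                                      # per production VM
MIN_MEMORY_GB = 128                                # per production VM
MAX_MEMORY_GB = {"OLAP": 16 * 1024, "OLTP": 24 * 1024}


@dataclass
class ProdVM:
    name: str
    cores: int
    memory_gb: int
    workload: str  # "OLAP" or "OLTP"


def check_landscape(vms, shared_pool=False):
    """Return a list of rule violations; an empty list means the plan fits."""
    problems = []
    # Assumption: a shared pool costs exactly one production VM slot.
    limit = MAX_PROD_VMS - 1 if shared_pool else MAX_PROD_VMS
    if len(vms) > limit:
        problems.append(f"{len(vms)} production VMs exceeds the limit of {limit}")
    for vm in vms:
        if vm.cores < MIN_CORES:
            problems.append(f"{vm.name}: fewer than {MIN_CORES} cores")
        if vm.memory_gb < MIN_MEMORY_GB:
            problems.append(f"{vm.name}: less than {MIN_MEMORY_GB}GB of memory")
        if vm.memory_gb > MAX_MEMORY_GB[vm.workload]:
            problems.append(f"{vm.name}: above the {vm.workload} memory limit")
    return problems


# Twelve production instances plus a shared pool, as in the customer example.
plan = [ProdVM(f"hana{i:02d}", cores=8, memory_gb=512, workload="OLTP")
        for i in range(12)]
print(check_landscape(plan, shared_pool=True))
```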

Most customers were quite happy with 8 concurrent VMs, so why should anyone care about 16? Turns out, some customers have really complex landscapes. I recently had discussions with a customer that has around 12 current and planned production HANA instances. They were debating whether to use HANA in a multi-tenant configuration. The problem is that all HANA tenants in a multi-tenant VM are tightly bound, i.e. when the VM, OS or SAP software needs to be updated or reconfigured, all tenants are affected simultaneously. While not impossible to deal with, this introduces operational complexity. If those same 12 instances were each placed in their own VM on a new POWER server, that operational complexity could be eliminated.

As much as this might benefit the edge customers with a large number of instances, it will really benefit cloud vendors that utilize Power with greater flexibility, more sharing of resources and lower management and infrastructure costs. Also, isolation between cloud clients is essential, so multi-tenancy is rarely an effective option. On the other hand, PowerVM offers very strong isolation, so this offers an excellent option for cloud providers even when different clients share the same infrastructure.

This announcement also closes a perceived gap where VMware could already run up to 16 concurrent VMs on an 8-socket system. The caveat to that support was that the minimum and maximum size of each VM when running at the 16 VM level was ½ socket. Of course, you could play the mix and match game with some VMs at the ½ socket level and others at the full or multi-socket level, but neither of these options provides for very good granularity. For systems with 28-core sockets, the granularity per VM is 14 cores, 28 cores and then multiples of 28 cores up to 112 cores. For those that are configured at 1/2 sockets, if there is no other workload to consume the other 1/2 socket, then the capacity is simply wasted. Memory, likewise, has some granularity limitations. According to VMware’s Best Practice Guide, “When an SAP HANA VM needs to be larger than a single NUMA node, allocate all resource of this NUMA node; and to maintain a high CPU cache hit ratio, never share a NUMA node for production use cases with other workloads. Also, avoid allocation of more memory than a single NUMA node has connected to it because this would force it to access memory that is not local to the SAP HANA processes scheduled on the NUMA node.” In other words, any memory not consumed by the HANA VM(s) on a particular socket/node is simply wasted since other nodes should not utilize memory across nodes.

By comparison, production HANA workloads running on PowerVM may be adjusted by 1 core at a time with memory granularity measured in MB, not GB or TB.
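A quick way to see the difference in granularity is to round the same core requirement under both sets of rules. The sketch below assumes the 28-core sockets and the ½-socket, 1-socket and whole-socket steps described above for VMware, and the 1-core steps with a 4-core minimum for PowerVM. It compares only the rounding effect, not the relative throughput of Intel and POWER cores, and the function names are invented for this example.

```python
CORES_PER_SOCKET = 28        # socket size used in the text
POWERVM_MIN_CORES = 4        # minimum for a production HANA VM on PowerVM


def vmware_allocation(required_cores, max_sockets=4):
    """Round up to half a socket, or to a whole number of sockets."""
    half_socket = CORES_PER_SOCKET // 2
    if required_cores <= half_socket:
        return half_socket
    sockets = -(-required_cores // CORES_PER_SOCKET)  # ceiling division
    if sockets > max_sockets:
        raise ValueError("requirement exceeds the largest supported VM")
    return sockets * CORES_PER_SOCKET


def powervm_allocation(required_cores):
    """Production VMs are adjusted one core at a time above the minimum."""
    return max(POWERVM_MIN_CORES, required_cores)


for need in (10, 20, 30, 60):
    print(f"need {need:3d} cores: VMware step {vmware_allocation(need):3d}, "
          f"PowerVM step {powervm_allocation(need):3d}")
```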

In an upcoming blog post, I will give some practical examples of landscapes and how PowerVM and VMware virtualization would utilize resources.

With this enhanced level of support from SAP, IBM Power Systems with PowerVM is once again the clear leader in terms of virtualization for HANA environments.

VMware pushes past 4TB SAP HANA limit

Excuse me while I yawn. Why such a lack of enthusiasm you might ask? Well, it is hard to know where to start. First, the “new” limit of 6TB is only applicable to transactional workloads like SoH and S/4HANA. BW workloads can now scale to an amazing size of, wait for it, 3TB. And granularity is still largely at the socket level. Let’s dissect this a bit.

VMware VMs with 6TB for OLTP/3TB for OLAP is just slightly behind IBM Power Systems’ current limitation of 24TB OLTP/16TB OLAP by a factor of 4 or more. (yes, I can divide 16/3 = 5.3)

But kudos to VMware. At least now customers with over 4TB but under 6TB requirements (including 3 to 5 years growth of course) can now consider VMware, right?

With this latest announcement (not really an announcement, a SAP note, but that is pretty typical for SAP) VMware is now supported as a “real” virtualization solution for HANA. Oh, how much they wish that was true. VMware is now supported on two levels: min and max size of “shared” socket at ½ of the socket’s capacity or min of 1 dedicated socket/max of 4 sockets with a granularity of 1 socket. At a ½ socket, the socket may be shared with another HANA production workload but SAP suggests an overhead of 14% in this scenario and it is not clear if they mean 14% total or 14% in addition to the 10% when using VMware in the first place. Even at this level, the theoretical capacity is so small as to be of interest for only the very smallest demands. At the dedicated socket level, VMware has achieved a fundamental breakthrough … physical partitioning. Let me reach back through the way-back machine … way, way back … to the 1990s (oh, you Millennials, you have no idea how primitive life used to be) when some of us used to subdivide systems along infrastructure boundaries and thought we were doing something really special. HP figured out how to do this about 5 years later (and are still doing this today), but let’s give them credit for trying and not criticize them for being a little slow … after all, not everyone can be a C student.

So, now, almost 30 years later, VMware is able to partition on physical boundaries of a socket for production HANA workloads. That is so cool that if any of you are similarly impressed, I have this incredibly sophisticated device that will calculate a standard deviation with the press of a button (yes, Millennials, we used to have specialized tools to help us add and subtract called calculators which were a massive improvement over slide rules, so back off! What, you have never heard of a slide rule … OMG!)

A little 101 of Power Systems for HANA: PowerVM (IBM’s hardware/firmware virtualization manager) can subdivide a physical system at a logical level of a core, not a physical socket, for HANA production workloads. You can also add (or subtract) capacity by increments of 1 core for HANA production, increments of 1/20th of a core for non-prod and other workloads. You can even add memory without cores even if that memory is not physically attached to the socket on which that core resides. But, there is more. During this special offer, only if you call in the next 1,000,000 minutes, at no extra charge, PowerVM will throw in the ability to move workloads and/or memory around as needed within a system or to another system in its cluster and share capacity unused by production workloads with a shared pool of VMs for application servers, non-prod or even host a variety of non-HANA, non-SAP, Linux, AIX or IBM i workloads on the same physical system with up to 64TB of memory shared amongst all workloads on a logical basis.

Shall we dive a little deeper into SAP’s support for HANA on VMware … I think we shall! So, we are all giddy about hosting a 6TB S/4 instance on VMware. The HANA appliance specs for a 6TB instance on Skylake are 4 sockets @ 28 cores/socket with hyperthreading enabled. A VMware 6.7 VM is supported with up to 128 virtual processors (vps). 4 x 28 x 2 = 224 vps.

I know, we have a small problem with math here. 128 is less than 224 which means the math must be wrong … or you can’t use all of the cores with VMware. To be precise, with hyperthreading enabled you can only use 16 cores per socket, or about 57% (16 of 28) of the cores used in the certified appliance test. And, that is before you consider the minimum 10% overhead noted in the SAP note. So, we are asked to believe that 128 * .9 = 115vps will perform as well as 224vps. As a career long salesman from Louisiana, I have to say, please call me because I know where I can get ahold of some prime real estate in the Atchafalaya Basin (you can look it up but it is basically swamp land) to sell to you for a really good price.

Alternately, we can disable hyperthreading and use all of 4 sockets for a single HANA DB workload. Once again, the math gets in the way. VMware estimates hyperthreading increases core throughput by about 15%, so logically, removing hyperthreading has the opposite effect, and once again, that 10% overhead still comes into play meaning even more performance degradation: .85 * .9 = 77% of the certified appliance capacity.
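For anyone who wants to check the arithmetic in the last few paragraphs, here it is as a short Python sketch. The inputs are the figures quoted above (4 sockets, 28 cores per socket, 128 vps per VM, 10% VMware overhead, roughly 15% hyperthreading uplift). Treating the loss from disabling hyperthreading as a flat 15% is the same simplification used in the text, not a measured result.

```python
SOCKETS = 4
CORES_PER_SOCKET = 28
THREADS_PER_CORE = 2          # hyperthreading enabled
MAX_VPS_PER_VM = 128          # VM limit cited in the text
VMWARE_OVERHEAD = 0.10        # minimum overhead from the SAP note
HT_UPLIFT = 0.15              # VMware's hyperthreading estimate, per the text

# Threads available to HANA on the certified bare-metal appliance.
appliance_vps = SOCKETS * CORES_PER_SOCKET * THREADS_PER_CORE

# With hyperthreading on, 128 vps cover only part of each socket.
usable_cores_per_socket = MAX_VPS_PER_VM // THREADS_PER_CORE // SOCKETS
core_fraction = usable_cores_per_socket / CORES_PER_SOCKET

# What is left of the 128 vps after the VMware overhead.
effective_vps = MAX_VPS_PER_VM * (1 - VMWARE_OVERHEAD)

# Hyperthreading off: all cores usable, minus the uplift and the overhead.
no_ht_fraction = (1 - HT_UPLIFT) * (1 - VMWARE_OVERHEAD)

print(f"appliance vps:            {appliance_vps}")
print(f"usable cores per socket:  {usable_cores_per_socket} "
      f"({core_fraction:.0%} of {CORES_PER_SOCKET})")
print(f"effective vps with HT:    {effective_vps:.1f}")
print(f"capacity without HT:      {no_ht_fraction:.1%} of the appliance")
```

The last figure comes out at 76.5%, which the text rounds to 77%.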

By the way, brand new in this updated SAP note is a security warning when using VMware. I have to admit a certain amount of surprise here as I believe this is the first time that using a virtualization solution on Intel systems is recognized by SAP as coming with some degree of risk … despite the National Vulnerability Database showing 1009 hits when searching on VMware. A similar search on PowerVM returns 0 results.

This new announcement does little to change the playing field. Intel based solutions using VMware to host production HANA environments will deliver stunted physically partitioned systems with almost no sharing of resources other than perhaps some I/O and a nice hefty bill for software and maintenance from VMware. In other words, as before, most customers will be confronted with a simple choice: choose bare-metal Intel systems for production HANA and use VMware only for non-prod servers which do not require the same stack as production, such as development or sandbox, or choose IBM Power Systems and fully exploit the server consolidation capabilities it offers, which continues the journey that most customers have been on since the early 2000s of improved datacenter efficiency using virtualized infrastructure.

TDI Phase 5 – SAPS based sizing bringing better TCO to new and existing Power Systems customers

SAP made a fundamental and incredibly important announcement this week at SAP TechEd in Las Vegas: TDI Phase 5 – SAPS based sizing for HANA workloads. Since its debut, HANA has been sized based on a strict memory to core ratio determined by SAP based on workloads and platform characteristics, e.g. generation of processor, MHz, interconnect technology, etc. This might have made some sense in the early days when much was not known about the loads that customers were likely to experience and SAP still had high hopes for enabling all customer employees to become knowledge workers with direct access to analytics. Over time, with very rare exception, it turned out that CPU loads were far lower than the ratios might have predicted.

I have only run into one customer in the past two years that was able to drive a high utilization of their HANA systems and that was a customer running an x86 BW implementation with an impressively high number of concurrent users at one point in their month. Most customers have experienced just the opposite, consistently low utilization regardless of technology.

For many customers, especially those running x86 systems, this has not been an issue. First, it is not a significant departure from what many have experienced for years, even those running VMware. Second, to compensate for relatively low memory and socket-to-socket bandwidth combined with high latency interconnects, many x86 systems work best with an excess of CPU. Third, many x86 vendors have focused on HANA appliances which are rarely utilized with virtualization and are therefore often single instance systems.

IBM Power Systems customers, by comparison, have been almost universal in their concern about poor utilization. These customers have historically driven high utilization, often over 65%. Power has up to 5 times the memory bandwidth per socket of x86 systems (without compromising reliability) and very wide and parallel interconnect paths with very low latencies. HANA has never been offered as an appliance on Power Systems, instead being offered only using a Tailored Datacenter Infrastructure (TDI) approach. As a result, customers view on-premise Power Systems as a sort of utility, i.e. that they should be able to use them as they see fit and drive as much workload through them as possible while maintaining the Service Level Agreements (SLA) that their end users require. The idea of running a system at 5%, or even 25%, utilization is almost an affront to these customers, but that is what they have experienced with the memory to core restrictions previously in place.

IBM’s virtualization solution, PowerVM, enabled SAP customers to run multiple production workloads (up to 8 on the largest systems) or a mix of production workloads (up to 7) with a shared pool of CPU resources within which an almost unlimited mix of VMs could run including non-prod HANA, application servers, as well as non-SAP and even other OS workloads, e.g. AIX and IBM i. In this mixed mode, some of the excess CPU resource not used by the production workloads could be utilized by the shared-pool workloads. This helped drive up utilization somewhat, but not enough for many.

These customers would like to do what they have historically done. They would like to negotiate response time agreements with their end user departments then size their systems to meet those agreements and resize if they need more capacity or end up with too much capacity.

The newly released TDI Overview document http://bit.ly/2fLRFPb describes the new methodology: “SAP HANA quicksizer and SAP HANA sizing reports have been enhanced to provide separate CPU and RAM sizing results in SAPS”. I was able to verify Quicksizer showing SAPS, but not the sizing reports. An SAP expert I ran into at TechEd suggested that getting the sizing reports to determine SAPS would be a tall order since they would have to include a database of SAPS capacity for every system on the market as well as number of cores and MHz for each one. (In a separate blog post, I will share how IBM can help customers to calculate utilized SAPS on existing systems). Customers are instructed to work with their hardware partner to determine the number of cores required based on the SAPS projected above. The document goes on to state: “The resulting HANA TDI configurations will extend the choice of HANA system sizes; and customers with less CPU intensive workloads may have bigger main memory capacity compared to SAP HANA appliance based solutions using fixed core to memory sizing approach (that’s more geared towards delivery of optimal performance for any type of a workload).”

Using a SAPS based methodology will be a good start and may result in fewer cores required for the same workload as would have been previously calculated based on a memory/core ratio. Customers that wish to allocate more or less CPU to those workloads will now have this option, meaning that even more significant reduction of CPU may be possible. This will likely result in much more efficient use of CPU resources, more capacity available to other workloads and/or the ability to size systems with fewer resources to drive down the cost of those systems. Either way helps drive much better TCO by reducing numbers and sizes of systems with the associated datacenter and personnel costs.

Existing Power customers will undoubtedly be delighted by this news. Those customers will be able to start experimenting with different core allocations and most will find they are able to decrease their current HANA VM sizes substantially. With the resources no longer required to support production, other workloads currently implemented on external systems may be consolidated to the newly, right sized, system. Application servers, central services, Hadoop, HPC, AI, etc. are candidates to be consolidated in this way.

Here is a very simple example: A hypothetical customer has two production workloads, BW/4HANA and S/4HANA which require 4TB and 3TB respectively. For each, HA is required as is Dev/Test, Sandbox and QA. Prior to TDI Phase 5, using Power Systems, the 4TB BW system would require roughly 82-cores due to the 50GB/core ratio and the S/4 workload would require roughly 33 cores due to the 96GB/core ratio. Including HA and non-prod, the systems might look something like:

Note the relatively small number of cores available in the shared pool (might be less than optimal) and the total number of cores in the system. Some customers may have elected to increase to an even larger system or utilize additional systems as a result. As this stood, this was already a pretty compelling TCO and consolidation story to customers.

With SAPS based sizing, the BW workload may require only 70 cores and S/4 21 cores (both are guesses based on early sizing examples and proper analysis of the SAP sizing reports and per core SAPS ratings of servers is required to determine actual core requirements). The resulting architecture could look like:

Note the smaller core count in each system. By switching to this methodology, lower cost CPU sockets may be employed and processor activation costs decreased by 24 cores per system. But the number of cores in the shared pool remains the same, so still could be improved a bit.
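The core counts in this example can be reproduced with a few lines of Python. The sketch assumes 1TB = 1024GB and takes the SAPS based figures (70 and 21 cores) straight from the text, where they are described as guesses; `cores_from_memory_ratio` is a name invented for this illustration.

```python
import math


def cores_from_memory_ratio(memory_gb, gb_per_core):
    """Pre-TDI Phase 5 sizing: cores follow from a fixed memory/core ratio."""
    return math.ceil(memory_gb / gb_per_core)


# 4TB BW system at 50GB/core, assuming 1TB = 1024GB.
bw_cores = cores_from_memory_ratio(4 * 1024, 50)
print(f"BW cores from the 50GB/core ratio: {bw_cores}")
# The same formula gives 32 for 3TB at 96GB/core, close to the
# "roughly 33" quoted in the text.

# Per-system core counts as stated in the text.
ratio_based = {"BW/4HANA": 82, "S/4HANA": 33}
saps_based = {"BW/4HANA": 70, "S/4HANA": 21}   # early guesses, per the text

savings = {name: ratio_based[name] - saps_based[name] for name in ratio_based}
total_saved = sum(savings.values())

for name, saved in savings.items():
    print(f"{name}: {ratio_based[name]} -> {saps_based[name]} cores "
          f"({saved} fewer)")
print(f"total reduction per system: {total_saved} cores")
```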

During a landscape session at SAP TechEd in Las Vegas, an SAP expert stated that customers will be responsible for performance and CPU allocation will not be enforced by SAP through HWCCT as had been the case in the past. This means that customers will be able to determine the number of cores to allocate to their various instances. It is conceivable that some customers will find that instead of the 70 cores in the above example, 60, 50 or fewer cores may be required for BW with decreased requirements for S/4HANA as well. Using this approach, a customer choosing this more hypothetical approach might see the following:

Note how the number of cores in the shared pool has increased substantially, allowing for more workloads to be consolidated to these systems, further decreasing costs by eliminating those external systems as well as being able to consolidate more SAN and Network cards, decreasing computer room space and reducing energy/cooling requirements.

A reasonable question is whether these same savings would accrue to an x86 implementation. The answer is not necessarily. Yes, fewer cores would also be required, but to take advantage of a similar type of consolidation, VMware must be employed. And if VMware is used, then a host of caveats must be taken into consideration:

- Overhead, reportedly 12% or more, must be added to the capacity requirements.

- I/O throughput must be tested to ensure load times, log writes, savepoints, snapshots and backup speeds are acceptable to the business.

- Limits must be understood, e.g. max memory in a VM is 4TB, which means that BW cannot grow by even 1KB.

- Socket isolation is required as SAP does not permit the sharing of a socket in a HANA production/VMware environment, meaning that reducing core requirements may not result in fewer sockets, i.e. this may not eliminate underutilized cores in an Intel/VMware system.

- Non-prod workloads can’t take advantage of capacity not used by production for several reasons, not the least of which is that SAP does not permit sharing of sockets between VM prod and non-prod instances, not to mention the reluctance of many customers to mix prod and non-prod using a software hypervisor such as VMware even if SAP permitted this.

Bottom line is that most customers, through an abundance of caution, or actual experience with VMware, choose to place production on bare-metal and non-prod, which does not require the same stack as prod, on VMware. Workloads which do require the same stack as prod, e.g. QA, also are usually placed on bare-metal. After closer evaluation, this means that TDI Phase 5 will have limited benefits to x86 customers.
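The socket isolation caveat is easy to illustrate. The sketch below pads a core requirement with the 12% overhead mentioned above and rounds up to whole sockets. The 28-core socket size and the way the overhead is applied (added to the core count before rounding) are assumptions for illustration, and `sockets_needed` is a hypothetical helper, not a VMware or SAP sizing tool.

```python
CORES_PER_SOCKET = 28   # assumed socket size for this illustration


def ceil_div(a, b):
    """Integer ceiling division, avoiding floating point rounding surprises."""
    return -(-a // b)


def sockets_needed(cores, overhead_pct=12):
    """Whole sockets required once the hypervisor overhead is added."""
    padded_cores = ceil_div(cores * (100 + overhead_pct), 100)
    return ceil_div(padded_cores, CORES_PER_SOCKET)


# BW core counts discussed in the example above.
for cores in (82, 70, 60, 50):
    print(f"{cores} cores -> {sockets_needed(cores)} dedicated sockets")
```

Under these assumptions, trimming BW from 70 to 60 cores still leaves it on 3 dedicated sockets, so the freed cores are stranded rather than returned to a shared pool.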

This announcement is the equivalent of finally being allowed to use 5th gear on your car after having been limited to only 4 for a long time. HANA on IBM Power Systems already had the fastest adoption in recent SAP history with roughly 950 customers selecting HANA on Power in just 2 years. TDI Phase 5 uniquely benefits Power Systems customers which will continue the acceleration of HANA on Power. Those individuals that recommended or made decisions to select HANA on Power will look like geniuses to their CFOs as they will now get the equivalent of new systems capacity at no cost.

What should you do when your LoBs say they are not ready for S/4HANA – part 2, Why choice of Infrastructure matters

Conversions to S/4HANA usually take place over several months and involve dozens to hundreds of steps. With proper care and planning, these projects can run on time, within budget and result in a final Go-Live that is smooth and occurs within an acceptable outage window. Alternately, horror stories abound of projects delayed, errors made, outages far beyond what was considered acceptable by the business, etc. The choice of infrastructure to support a conversion may be the last thing on anyone’s mind, but it can have a dramatic impact on achieving a successful outcome.

A conversion includes running many pre-checks[i] which can run for quite a while[ii] which implies that they can drive CPU utilization to high levels for a significant duration and impact other running workloads. As a result, consultants routinely recommend that you make a copy of any system, especially production, against which you will run these pre-checks. SAP recommends that you run these pre-checks against every system to be converted, e.g. Development, Test, Sandbox, QA, etc. If they are being used for on-going work, it may be advisable to also make a copy of them and run those copies on other systems or within virtual machines which can be limited to avoid causing performance issues with other co-resident virtual machines.

In order to find issues and correct them, conversion efforts usually involve a phased approach with multiple conversions of support systems, e.g. Dev, Test, Sandbox, QA using a tool such as SAP’s Systems Update Manager with the Database Migration Option (SUM w/DMO). One of the goals of each run is to figure out how long it will take and what actions need to be taken to ensure the Go-Live production conversion completes within the required outage window, including any post-processing, performance tuning, validation and backups.

In an attempt to keep expenses low, many customers will choose to use existing systems or VMs in addition to a new “target” system, or systems if HA is to be tested. This means that the customer’s network will likely be used in support of these connections. Taken together, the use of shared infrastructure components means that other events affecting those components can impact these tests and activities. For example, if a VM is used but not enough CPU or network bandwidth is provided, the duration of the test may extend well beyond what is planned, meaning more cost for per-hour consulting, and the test may not provide the insight into what needs to be fixed and how long the actual migration may take. How about if you have plenty of CPU capacity or even a dedicated system, but the backup group decides to initiate a large database backup at the same time and on the same network that your migration test is using? Or maybe you decide to run a test at a time that another group, e.g. operations, needs to test something that impacts it or when new equipment or firmware is being installed and modifications to shared infrastructure are occurring, etc. Of course, you can have good change management and carefully arrange when your conversion tests will occur, which means that you may have restricted windows of opportunity at times that are not always convenient for your team.

Let’s not forget that the application/database conversion is only one part of a successful conversion. Functional validation tests are often required which could overwhelm limited infrastructure or take it away from parallel conversion tasks. Other easily overlooked but critical tasks include ensuring all necessary interfaces work; that third party middleware and applications install and operate correctly; that backups can be taken and recovered; that HA systems are tested with acceptable RPO and RTO; that DR is set up and running properly also with acceptable RPO and RTO. And since this will be a new application suite with different business processes and a brand new Fiori interface, training most likely will be required as well.

So, how can the choice of infrastructure make a difference to this almost overwhelming set of issues and requirements? It comes down to flexibility. Infrastructure which is built on virtualization allows many of these challenges to be easily addressed. I will use an existing IBM Power Systems customer running Oracle, DB2 or Sybase to demonstrate how this would work.

The first issue dealt with running pre-checks on existing systems. If those existing systems are Power Systems and enough excess capacity is available, PowerVM, the IBM Virtualization Hypervisor, allows a VM to be started with an exact copy of production, passed through normal post-processing such as BDLS to create a non-production copy or cloned and placed behind a network firewall. This VM could be fenced off logically and throttled such that production running on the same system would always be given preference for CPU resources. By comparison, a similar database located on an x86 system would likely not be able to use this process as the database is usually running on bare-metal systems for which no VM can be created.

Alternately, for Power Systems, the exact same process could be utilized to carve out a VM on a new HANA target system and this is where the real value starts to emerge. Once a copy or clone is available on the target HANA on Power system, as much capacity can be allocated to the various pre-checks and related tasks as needed without any concern for the impact on production or the need to throttle these processes thereby optimizing the duration of these tasks. On the same system, HANA target VMs may be created. As mock conversions take place, an internal virtual network may be utilized. Not only is such a network faster by a factor of 2 or more, but it is dedicated to this single purpose and completely unaffected by anything else going on within the datacenter network. No coordination is required beyond the conversion team which means that there is no externally imposed delay to begin a test or constraints on how long such a test may take or, for that matter, how many times such a test may be run.

The story only gets better. Remember, SAP suggests you run these pre-checks on all non-prod landscapes. With PowerVM, you may fire up any number of different copy/clone VMs and/or HANA VMs. This means that as you move from one phase to the next, from one instance to the next, from conversion to production, run a static validation environment while other tasks continue, conduct training classes, run many different phases for different projects at the same time, PowerVM enables the system to respond to your changing requirements. This helps you avoid purchasing extra interim systems and lets you buy when needed rather than significantly ahead of time, as the inflexibility of other platforms can require. You can even simulate an HA environment to allow you to test your HA strategy without needing a second system, up to the physical limits of the system, of course. This is where a tool like SAP’s TDMS, Test Data Migration Server, might come in very handy.

And when it comes time for the actual Go-Live conversion, the running production database VM may be moved live from the “old” system, without any downtime, to the “new” system and the migration may now proceed using the virtual, in-memory network at the fastest possible speed and with all external factors removed. Of course, if the “old” system is based on POWER8, it may then be used/upgraded for other HANA purposes. Prior Power Systems as well as current generation POWER8 systems can be used for a wide variety of other purposes, both SAP and those that are not.

Bottom line: The choice of infrastructure can help you eliminate external influences that cause delays and complications to your conversion project, optimize your spend on infrastructure, and deliver the best possible throughput and lowest outage window when it comes to the Go-Live cut-over. If complete control over your conversion timeline was not enough, avoidance of delays keeps costs for non-fixed cost resources to a minimum. For any SAP customer not using Power Systems today, this flexibility can provide enormous benefits. However, the process of moving between systems would be somewhat different. For any existing Power Systems customer, this flexibility makes a move to HANA on Power Systems almost a no-brainer, especially since IBM has so effectively removed TCA as a barrier to adoption.

[i] https://blogs.sap.com/2017/01/20/system-conversion-to-s4hana-1610-part-2-pre-checks/

[ii] https://uacp.hana.ondemand.com/http.svc/rc/PRODUCTION/pdfe68bfa55e988410ee10000000a441470/1511%20001/en-US/CONV_OP1511_FPS01.pdf page 19

Update – SAP HANA support for VMware, IBM Power Systems and new customer testimonials

The week before Sapphire, SAP unveiled a number of significant enhancements. VMware 6.0 is now supported for a production VM (notice the lack of a plural); more on that below. Hybris Commerce, a number of apps surrounding SoH, and S/4HANA are now supported on IBM Power Systems. Yes, you read that right. The Holy Grail of SAP, S/4 or more specifically, 1511 FPS 02, is now supported on HoP. Details, as always, can be found in SAP note: 2218464 – Supported products when running SAP HANA on IBM Power Systems. This was an important announcement, or should I say non-announcement, as the above SAP note, which I watch on almost a daily basis because it changes so often, was the only place where this was mentioned. This is not a dig at SAP as this is their characteristic way of releasing updates on availability to previously suggested intentions and is consistent as this was how they non-announced VMware 6.0 support as well. Hasso Plattner, various SAP executives and employees, in Sapphire keynotes and other sessions, mentioned support for IBM Power Systems in almost a nonchalant manner, clearly demonstrating that HANA on Power has moved from being a niche product to mainstream.

Also, of note, Pfizer delivered an ASUG session during Sapphire including significant discussion about their use of IBM Power Systems. I was particularly struck by how Joe Caruso, Director, ERP Technical Architecture at Pfizer described how Pfizer tested a large BW environment on both a single scale-up Power System with 50 cores and 5TB of memory and on a 6-node x86 scale-out cluster (tested on two different vendors, not mentioned in this session but probably not critical as their performance differences were negligible), 60 cores on each node with 1 master node and 4 worker nodes plus a hot-standby. After appropriate tuning, including utilizing table partitioning on all systems, including Power, the results were pretty astounding; both environments performed almost identically, executing Pfizer’s sample set, composed of 75+ queries, in 5.7 seconds, an impressive 6 to 1 performance advantage on a per core basis, not including the hot-standby node. What makes this incredible is that the official BW-EML benchmark only shows an advantage of 1.8 to 1 vs. the best of breed x86 competitor and another set of BW-EML benchmark results published by another x86 competitor shows scale-out to be only 15% slower than scale-up. For anyone that has studied the Power architecture, especially POWER8, you probably know that it has intrinsics that suggest it should handle mixed, complex and very large workloads far better than x86, but it takes a customer executing against their real data with their own queries to show what this platform can really do. Consider benchmarks to be the rough equivalent of a NASCAR race car, with the best of engineering, mechanics, analytics, etc., vs. customer workloads which, in this analogy, involve transporting varied precious cargo in traffic, on the highway and on sub-par road conditions.
Pfizer decided that the performance demonstrated in this PoC was compelling enough to substantiate their decision to implement using IBM Power Systems with an expected go-live later this year. Also, of interest, Pfizer evaluated the reliability characteristics of Power, based in part on their use of Power Systems for conventional database systems over the past few years, and decided that a hot-standby node for Power was unnecessary, further improving the overall TCO for their BW project. I had not previously considered this option, but it makes sense considering how rarely Power Systems are unable to handle predictable, or even unpredictable, faults without interrupting running workloads. Add to this that, for many, the loss of analytical environments is unlikely to result in significant economic loss.
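For anyone who wants to check the 6 to 1 per core figure from the Pfizer PoC, here is a minimal sketch of the arithmetic in Python. It uses only the core and node counts quoted above; the variable and function names are my own, and it assumes that "per core" simply means the ratio of active x86 cores to Power cores at roughly equal elapsed time.

```python
# Figures quoted in the Pfizer session summary above.
POWER_CORES = 50             # single scale-up Power System
X86_CORES_PER_NODE = 60      # each node of the scale-out cluster
X86_ACTIVE_NODES = 1 + 4     # 1 master + 4 workers; hot-standby excluded


def per_core_advantage(power_cores, x86_cores_per_node, x86_active_nodes):
    """Ratio of active x86 cores to Power cores for the same elapsed time."""
    x86_cores = x86_cores_per_node * x86_active_nodes
    return x86_cores / power_cores


x86_total = X86_CORES_PER_NODE * X86_ACTIVE_NODES
ratio = per_core_advantage(POWER_CORES, X86_CORES_PER_NODE, X86_ACTIVE_NODES)

print(f"Active x86 cores: {x86_total}")
print(f"Per-core advantage: {ratio:.1f} to 1")
```

Counting the hot-standby node as well would only widen the gap, which is why the comparison above leaves it out.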

Also in a Sapphire session, Steve Parker, Director Application Development, Kennametal, shared a very interesting story about their journey to HANA on Power. Though they encountered quite a few challenges along the way, not the least being that they started down the path to Suite on HANA and S/4HANA prior to it being officially supported by SAP, they found the Power platform to be highly stable and its flexibility was of critical importance to them. Very impressively, they reduced response times compared to their old database, Oracle, by 60% and reduced the run-time of a critical daily report from 4.5 hours to just 45 minutes, an 83% improvement and month end batch now completes 33% faster than before. Kennametal was kind enough to participate in a video, available on YouTube at: https://www.youtube.com/watch?v=8sHDBFTBhuk as well as a write up on their experience at: http://www-03.ibm.com/software/businesscasestudies/us/en/gicss67sap?synkey=W626308J29266Y50.

As I mentioned earlier, SAP snuck in a non-announcement about VMware and how a single production VM is now supported with VMware 6.0 in the week prior to Sapphire. SAP note 2315348 describes how a customer may support a single SAP HANA VM on VMware vSphere 6 in production. One might reasonably question why anyone would want to do this. I will withhold any observations on the mindset of such an individual and instead focus on what is, and is not, possible with this support. What is not possible: the ability to run multiple production VMs on a system or to mix production and non-prod. What is possible: the ability to utilize up to 128 virtual processors and 4TB of memory for a production VM, utilize vMotion and DRS for that VM and to deliver DRAMATICALLY worse performance than would be possible with a bare-metal 4TB system. Why? Because 128 vps with Hyperthreading enabled (which just about everyone does) utilizes 64 cores. To support 6TB today, a bare-metal Haswell-EX system with 144 cores is required. If we extrapolate that requirement to 4TB, 96 cores would be required. Remember, SAP previously explained a minimum overhead of 12% was observed with a VM vs. bare-metal, i.e. those 64 cores under VMware 6.0 would operate, at best, like 56 cores on bare-metal or 42% less capacity than required for bare-metal. Add to this the fact that you can’t recover any capacity left over on that system and you are left with a hobbled HANA VM and lots of leftover CPU resources. So, vMotion is the only thing of real value to be gained? Isn’t HANA System Replication and a controlled failover a much more viable way of moving from one system to another? Even if vMotion might be preferred, does vMotion move memory pages from source to target system using the EXACT same layout as was implemented on the source system? I suspect the answer is no as vMotion is designed to work even if other VMs are currently running on the target system, i.e. it will fill memory pages based on availability, not based on location. As a result, this would mean that all of the wonderful CPU/memory affinity that HANA so carefully established on the source system would likely be lost with a potentially huge impact on performance.
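The capacity math in the paragraph above can be laid out as a short Python sketch. All inputs are the figures quoted there; the names are mine, and the linear extrapolation from 6TB to 4TB and the rounding to whole cores follow the post's own simplifications rather than any official SAP sizing rule.

```python
THREADS_PER_CORE = 2           # Hyperthreading enabled
VM_VCPUS = 128                 # virtual processors allowed for the production VM
VM_MEMORY_TB = 4               # memory allowed for the production VM
BARE_METAL_CORES_6TB = 144     # Haswell-EX cores cited for a 6TB system
BARE_METAL_MEMORY_TB = 6
VM_OVERHEAD = 0.12             # minimum VM vs. bare-metal overhead cited

# Physical cores behind 128 hyperthreaded virtual processors.
vm_cores = VM_VCPUS // THREADS_PER_CORE

# Cores a bare-metal system would need at 4TB, extrapolated linearly.
required_cores = BARE_METAL_CORES_6TB * VM_MEMORY_TB / BARE_METAL_MEMORY_TB

# Bare-metal equivalent of the VM's cores after overhead, in whole cores.
effective_cores = round(vm_cores * (1 - VM_OVERHEAD))

# Fraction of the required bare-metal capacity that is missing.
shortfall = 1 - effective_cores / required_cores

print(f"Cores behind the VM:       {vm_cores}")
print(f"Cores required at 4TB:     {required_cores:.0f}")
print(f"Effective cores in the VM: {effective_cores}")
print(f"Capacity shortfall:        {shortfall:.0%}")
```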

So, to summarize, this new VMware 6.0 support promises bad performance, incredibly poor utilization in return for the potential to not use System Replication and suffer even more performance degradation upon movement of a VM from one system to another using vMotion. Sounds awesome but now I understand why no one at the VMware booth at Sapphire was popping Champagne or in a celebratory mood. (Ok, I just made that up as I did not exactly sit and stare at their booth.)

Certified vs. supported HANA solutions; what’s the difference and why should you care

There seems to be a lot of confusion about the terms “Certified” and “Supported” in the HANA context. Those are not qualitative terms but more of a definition of how solutions are put together and delivered. SAP recognized that HANA was such a new technology, back in 2011, and had so many variables which could impact performance and support, that they asked technology vendors to design appliances which SAP could review, test and ensure that all performance characteristics met SAP’s KPIs. Furthermore, with a comprehensive understanding of what was included in an appliance, SAP could offer a one-stop-shop approach to support, i.e. if a customer has a problem with a “Certified” appliance, just call SAP and they will manage the problem and work with the technology vendor to determine where the problem is, how to fix it and drive it to full resolution.

Sounds perfect, right? Yes … as long as you don’t need to make any modifications as business needs change. Yes … as long as you don’t mind the system running at low utilization most of the time. Yes … as long as the systems, storage and interconnects that are included in the “certified” solution match the characteristics that you consider important, are compatible with your IT infrastructure and allow you to use the management tools of your choice.

So, what is the option? SAP introduced TDI, the Tailored Datacenter Integration approach. It allows customers to put together HANA environments in a more flexible manner using a customer defined set of components (with some restrictions) which meet SAP’s performance KPIs. What is the downside? Meeting those KPIs and problem resolution are customer responsibilities. Sounds daunting, but it is not. Fortunately, SAP doesn’t just say, go forward and put anything together that you want. Instead, they restrict servers and storage subsystems to those for which internal or external performance tests have been completed to SAP standards. This allows reasonable ratios to be derived, e.g. the memory to core ratio for various types of systems and HANA implementation choices. Some restrictions do apply, for example Intel Haswell-EX environments must utilize systems which have been approved for use in appliances and Haswell-EP and IBM Power Systems environments must use systems listed on the appropriate “supported” tabs of the official HANA infrastructure support guide.[i] Likewise, Certified Enterprise storage subsystems are also listed, but this does not rule out the use of internal drives for TDI solutions.

Any HANA solution, whether an appliance or a TDI defined system, is equally capable of handling the HANA workload which falls within the maximums that SAP has identified. SAP will support HANA on any of the above. As to the full solution support, as mentioned previously, this is a customer responsibility. Fortunately, vendors, such as IBM, offer a one-stop-shop support package. IBM calls its package Custom Technical Support (US) or Total Solution Service (outside of US). Similar to the way that SAP supports an appliance, with this offering, a customer need call only one number for support. IBM’s support center will then work with the customer to do problem determination and problem source identification. When problems are determined to be caused by IBM, SAP or SUSE products, warm transfers are made to those groups. The IBM support center stays engaged even after the warm transfer occurs to ensure the problem is resolved and delivered to the customer. In addition, customers may benefit from optional proactive services (on-site or remote) to analyze the system in order to receive recommendations to keep the system up to date and/or to perform necessary OS, firmware or hardware updates and upgrades. With these proactive support offerings, customers can ensure that the HANA systems are maintained in line with SAP’s planned release calendar and are fully prepared for future upgrades.

There are a couple of caveats however. Since TDI permits the use of the customer’s preferred network and storage vendors, these sorts of support offerings typically encompass only the vendors’ products that are within the scope of the warm transfer agreements of each offering vendor. As a result, a problem with a network switch or a third-party storage subsystem for which the proactive support vendor does not have a warm transfer support agreement would still be the responsibility of the customer.

So, should a customer choose a “certified” solution or a TDI supported solution? The answer depends on the scope of the HANA implementation, the customer’s existing standards, skills and desire to utilize them, the flexibility with resource utilization and instance placement desired and, of course, cost.

Scope – If HANA is used as a side-car, a small BW environment, or perhaps for Business One, an appliance can be a viable option, especially if the HANA solution will be located in a setting for which local skilled personnel are not readily available. If, however, the HANA environment is more complex, e.g. BW scale-out, SoH, S/4, large, etc, and located in a company’s main data centers with properly skilled individuals, then a TDI supported approach may be more desirable.

Standards – Many customers have made large investments in network infrastructure, storage subsystems and the tools and skills necessary to manage them. Appliances that include components which are not part of those standards not only bring in new devices that are unfamiliar to the support staff, but may be largely invisible to the tools currently in use.

Flexibility – Appliances are well defined, single purpose devices. That definition includes a fixed amount of memory, CPU resources, I/O adapters, SSD and/or HDD devices. Simple to order, inflexible to change. If a different amount of any of the above resources is desired, in theory, any change permitted by the offering vendor results in the device moving from a SAP supported appliance to a TDI supported configuration instantly requiring the customer to accept responsibility for everything just as quickly. By comparison, TDI supported solutions start out as a customer responsibility, meaning they have been tailored around the customer’s standards and can be modified as desired at any time. All that is required for support is to run SAP’s HWCCT (Hardware Configuration Check Tool) to ensure that the resulting configuration still meets all SAP KPIs. As a result, if a customer desires to virtualize, mixing multiple production and non-production or even non-SAP workloads (when supported by the chosen virtualization solution, see my blog post on VMware and HANA recently published), a TDI solution, vendor and technology dependent, supports this by definition; an appliance does not. Likewise, a change in capacity, e.g. physical addition/removal of components, logical change of capacity, often called Capacity on Demand and VM resizing, are fully supported with TDI, not with appliances. As a result, once a limit is reached with an appliance, either a scale-out approach must be utilized, in the case of analytics that support scale-out, or the appliance must be decommissioned and replaced with a larger one. A TDI solution with additional capacity available, or with the ability to add more, gives the customer the ability to upgrade in place, thereby providing greater investment protection.

Cost – An appliance trades simplicity for potentially higher TCO as it is designed to meet the above mentioned KPIs without a comprehensive understanding of what workload will be handled by said appliance, often resulting in dramatic over-capacity, e.g. uses dozens of HDDs to meet disk throughput requirements. By comparison, customers with existing enterprise storage subsystems may need only a few additional SSD and/or HDDs to meet those KPIs with limited incremental cost to infrastructure, environmentals and support. Likewise, the ability to use fewer, potentially larger systems with the ability to co-reside production, non-prod, app servers, non-SAP VMs, HA, etc., can result in significant reductions of systems footprints, power, cooling, management and associated costs.

IBM Power Systems has chosen to take the TDI-only approach as a direct result of feedback received from customers, especially enterprise customers, that are used to managing their own systems, have available skills, have prevalent IT standards, etc. HANA on Power is based on virtualization, so is designed, by default, to be a TDI based solution. HANA on Power allows for one or many HANA instances, a mixture of prod and potentially non-prod or non-HANA to share the system. HA and DR can be mixed with other prod and non-prod instances.

I am often asked about t-shirt sizes, but this is a clear indication that the individual asking the question has a mindset based on appliance sizing. TDI architecture involves landscape sizing and encompasses all of the various components required to support the HANA and non-HANA systems, so a t-shirt sizing would end up being completely misleading unless a customer only needs a single system, no HA, no DR, no non-prod, no app servers, etc.

[i] http://global.sap.com/community/ebook/2014-09-02-hana-hardware/enEN/appliances.html

HoP keeps Hopping Forward – GA Announcement and New IBM Solution Editions for SAP HANA

Almost two years ago, I speculated about the potential value of a HANA on Power solution. In June, 2014, SAP announced a Test and Evaluation program for Scale-up BW HANA on Power. That program shifted into high gear in October, 2014 and roughly 10 customers got to start kicking the tires on this solution. Those customers had the opportunity to push HANA to its very limits. Remember, where Intel systems have 2 threads per core, POWER8 has up to 8 threads per core. Where the maximum size of most conventional Intel systems can scale to 240 threads, the IBM POWER E870 can scale to an impressive 640 threads and the E880 system can scale to 1536 threads. This means that IBM is able to provide an invaluable test bed for system scalability to SAP. As SAP’s largest customers move toward Suite “4” HANA (S4HANA), they need to have confidence in the scalability of HANA and IBM is leading the way in proving this capability.

A Ramp-up program began in March with approximately 25 customers around the world being given the opportunity to have access to GA level code and start to build out BW POC and production environments. This brings us forward to the announcement by SAP this week @ SapphireNow in Orlando of the GA of HANA on Power. SAP announced that customers will have the option of choosing Power for their BW HANA platform, initially to be used in a scale-up mode and plans to support scale-out BW, Suite on HANA and the full complement of side-car applications over the next 12 to 18 months.

Even the most loyal IBM customer knows the comparative value of other BW HANA solutions already available on the market. To this end, IBM announced new “solution editions”. A solution edition is simply a packaging of components, often with special pricing, to match expectations of the industry for a specific type of solution. “Sounds like an appliance to me” says the guy with a Monty Python type of accent and intonation (no, I am not making fun of the English and am, in fact, a huge fan of Cleese and company). True, if one were to look only at the headline and ignore the details. In reality, IBM is looking toward these as starting points, not end points and most certainly not as any sort of implied limitation. Remember, IBM Power Systems are based on the concept of Logical Partitions using PowerVM, the IBM virtualization hypervisor. As a result, a Power “box” is simply that, a physical container within which one or multiple logical systems reside and the size of each “system” is completely arbitrary based on customer requirements.

So, a “solution edition” simply defines a base configuration designed to be price competitive with the industry while allowing customers to flexibly define “systems” within it to meet their specific requirements and add incremental capability above that minimum as is appropriate for their business needs. While a conventional x86 system might have 1TB of memory to support a system that requires 768GB, leaving the rest unutilized, a Power System provides for that 768GB system and allows the rest of the memory to be allocated to other virtual machines. Likewise, HANA is often characterized by periods of 100% utilization, in support of instantaneous response time demanded of ad-hoc queries, followed by unfathomably long periods (in computer terms) of little to no activity. Many customers might consider this to be a waste of valuable computing resource and look forward to being able to harness this for the myriad of other business purposes that their businesses actually depend on. This is the promise of Power. Put another way, the appliance model results in islands of automation like we saw in the 1990s where Power continues the model of server consolidation and virtualization that has become the modus operandi of the 2000s.

But, says the pitchman for a made for TV product, if you call right now, we will double the offer. If you believe that, then you are probably not reading my blog. If a product was that good, they would not have to give you more for the same price. Power, on the other hand, takes a different approach. Where conventional BW HANA systems offer a maximum size of 2TB for a single node, Power has no such inherent limitations. To handle larger sizes, conventional systems must “scale-out” with a variety of techniques, potentially significantly increased costs and complexity. Power offers the potential to simply “scale-up”. Future IBM Power solutions may be able to scale-up to 4TB, 8TB or even 16TB. In a recent post to this blog, I explained that to match the built in redundancy for mission critical reliability of memory in Power, x86 systems would require memory mirroring at twice the amount of memory with an associated increase in CPU and reduction in memory bandwidth for conventional x86 systems. SAP is pushing the concepts of MCOS, MCOD and multi-tenancy, meaning that customers are likely to have even more of their workloads consolidated on fewer systems in the future. This will result in demand for very large scaling systems with unprecedented levels of availability. Only IBM is in position to deliver systems that meet this requirement in the near future.

Details on these solution editions can be found at http://www-03.ibm.com/systems/power/hardware/sod.html

In the last few days, IBM and other organizations have published information about the solution editions and the value of HANA on Power. Here are some sites worth visiting:

Press Release: IBM Unveils Power Systems Solutions to Support SAP HANA

Video: The Next Chapter in IBM and SAP Innovation: Doug Balog announces SAP HANA on POWER8

Case study: Technische Universität München offers fast, simple and smart hosting services with SAP and IBM

Video: Technische Universität München meet customer expectations with SAP HANA on IBM POWER8

Analyst paper: IBM: Empowering SAP HANA Customers and Use Cases

Article: HANA On Power Marches Toward GA

Selected SAP Press

ComputerWorld: IBM’s new Power Systems servers are just made for SAP Hana

eWEEK, IBM Launches Power Systems for SAP HANA

ExecutiveBiz, IBM Launches Power Systems Servers for SAP Hana Database System; Doug Balog Comments

TechEYE.net, IBM and SAP work together again

ZDNet: IBM challenges Intel for space on SAP HANA

Data Center Knowledge: IBM Stakes POWER8 Claim to SAP Hana Hardware Market

Enterprise Times: IBM gives SAP HANA a POWER8 boost

The Platform: IBM Scales Up Power8 Iron, Targets In-Memory

Also a planning guide for HANA on Power has been published at http://www-03.ibm.com/support/techdocs/atsmastr.nsf/WebIndex/WP102502 .

Is VMware ready for HANA production?

Virtualizing SAP HANA with VMware in productive environments is not supported at this time, but according to Arne Arnold with SAP, based on his blog post of Nov 5, http://www.saphana.com/community/blogs/blog/2013/11/05/just-a-matter-of-time-sap-hana-virtualized-in-production, they are working hard in that direction.

Clearly memory can be assigned to individual partitions with VMware and to a more limited extent, CPU resources may also be assigned although this may be a bit more limited in its effectiveness. The issues that SAP will have to overcome, however, are inherent limitations in scalability of VMware partitions, I/O latency and potential contention between partitions for CPU resources.

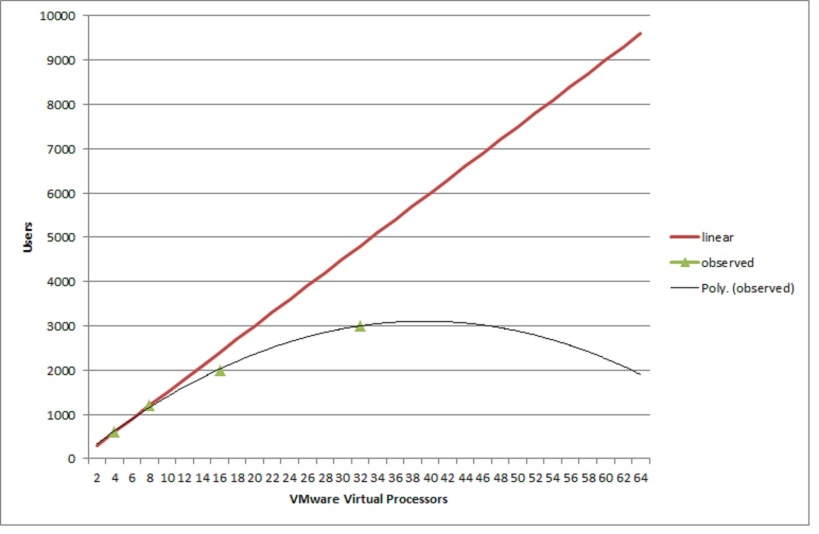

As I discussed in my blog post late last year, https://saponpower.wordpress.com/2012/10/23/sap-performance-report-sponsored-by-hp-intel-and-vmware-shows-startling-results/, VMware 5.0 was proven, by VMware, HP and Intel, to have severe performance limitations and scalability constraints. In the lab test, a single partition achieved only 62.5% scalability, overall, but what is more startling is the scalability between each measured interval. From 4 to 8 threads, they were able to double the number of users, thereby demonstrating 100% scalability, which is excellent. From 8 to 16 threads, they were only able to handle 66.7% more users despite doubling the number of threads. From 16 to 32 threads, the number of users supported increased only 50%. Since the time of the study being published, VMware has released vSphere 5.1 with an architected limit of 64 threads per partition and 5.5 with an architected limit of 128 threads per partition. Notice my careful wording of “architected limit”, not the official VMware wording of “scalability”. Scaling implies that with each additional thread, additional work can be accomplished. Linear scaling implies that each time you double the number of threads, you can accomplish twice the amount of work. Clearly, vSphere 5.0 was unable to attain even close to linear scaling. But now with the increased number of threads supported, can they achieve more work? Unfortunately, there are no SAP proof points to answer this question. All that we can do is to extrapolate the results from their earlier published results assuming the only change was the limitation in the number of architected threads.
If we use the straightforward Microsoft Excel “trendline” function to project results using a Polynomial with an order of 2, (no, it has been way too long since I took statistics in college to explain what this means but I trust Microsoft (lol)) we see that a VMware partition is unlikely to ever achieve much more throughput, without a major change in the VMware kernel, than it achieved with only 32 threads. Here is a graph that I was able to create in Excel using the data points from the above white paper.
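For readers without Excel handy, the same kind of projection can be sketched in plain Python. This is an approximation under stated assumptions, not a reproduction of my spreadsheet: it rebuilds relative user counts from the percentage increases quoted above (the actual user counts are in the white paper and are not repeated here), and it fits an order 2 polynomial by ordinary least squares, which is my understanding of what an order 2 trendline does. The function names are my own.

```python
THREADS = [4, 8, 16, 32]
# Growth in supported users at each doubling of threads, as quoted above:
# 100% more, 66.7% more, 50% more.
GROWTH = [2.0, 1 + 2 / 3, 1.5]


def relative_users(growth):
    """Users supported at each step, relative to the 4-thread result."""
    users = [1.0]
    for factor in growth:
        users.append(users[-1] * factor)
    return users


def fit_quadratic(xs, ys):
    """Least-squares fit of y = a*x^2 + b*x + c via the normal equations."""
    n = len(xs)
    sx = sum(xs)
    sx2 = sum(x ** 2 for x in xs)
    sx3 = sum(x ** 3 for x in xs)
    sx4 = sum(x ** 4 for x in xs)
    sy = sum(ys)
    sxy = sum(x * y for x, y in zip(xs, ys))
    sx2y = sum(x * x * y for x, y in zip(xs, ys))
    # Augmented matrix for the unknowns (a, b, c).
    m = [
        [sx4, sx3, sx2, sx2y],
        [sx3, sx2, sx, sxy],
        [sx2, sx, n, sy],
    ]
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(3):
            if r != col:
                f = m[r][col] / m[col][col]
                m[r] = [v - f * p for v, p in zip(m[r], m[col])]
    return tuple(m[i][3] / m[i][i] for i in range(3))


users = relative_users(GROWTH)

# Overall scalability: growth in users divided by growth in threads.
overall = (users[-1] / users[0]) / (THREADS[-1] / THREADS[0])

a, b, c = fit_quadratic(THREADS, users)
peak_threads = -b / (2 * a)          # vertex of the fitted parabola
peak_users = a * peak_threads ** 2 + b * peak_threads + c

print(f"Overall scalability from 4 to 32 threads: {overall:.1%}")
print(f"Fitted curve peaks near {peak_threads:.0f} threads")
print(f"Relative users at the peak: {peak_users:.2f} (vs {users[-1]:.2f} at 32 threads)")
```

With these inputs the fitted curve turns over only a little past 32 threads and at a user count only slightly above the 32-thread result, which is consistent with the point made above. A parabola is of course a crude model and says nothing reliable far beyond the measured range.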

Remember, at 32 threads, with Intel Hyperthreading, this represents only 16 cores. As a 1TB BW HANA system requires 80 cores, it is rather difficult to imagine how a VMware partition could ever handle this sort of workload much less how it would respond to larger workloads. Remember, 1TB = 512GB of data space which, at a 4 to 1 compression ratio, equals 2TB of data. VMware starts to look more and more inadequate as data size increases.

And if a customer was misled enough by VMware or one of their resellers, they might think that using VMware in non-prod was a good idea. Has SAP or a reputable consultant ever recommended using one architecture and stack in non-prod and a completely different one in prod?

So, in which case would virtualizing HANA be a good idea? As far as I can tell, only if you are dealing with very small HANA databases. How small? Let’s do the math: assuming linear scalability (which we have already proven above is not even close to what VMware can achieve) 32 threads = 16 cores which is only 20% of the capacity of an 80 core system. 20% of 2TB = 400GB of uncompressed data. At the 62.5% scalability described above, this would diminish further to 250GB. There may be some side-car applications for which a large enterprise might replicate only 250GB of data, but do you really want to size for the absolute maximum throughput and have no room for growth other than chucking the entire system and moving to newer processor versions each time they come out? There might also be some very small customers which have data that can currently fit into this small a space, but once again, why architect for no growth and potentially failure? Remember, this was a discussion only about scalability, not response time. Is it likely that response time also degrades as VMware partitions increase in size? Silly me! I forgot to mention that the above white paper showed response time increasing from .2 seconds @ 4 threads to 1 second @ 32 threads, a 400% increase in response time. Isn’t the goal of HANA to deliver improved performance? Kind of defeats the purpose if you virtualize it using VMware!
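The "let's do the math" section above boils down to a few lines of Python. This sketch uses only the numbers from this post; the names are mine, and it follows the post's round-number treatment of 2TB as 2000GB.

```python
BASELINE_CORES = 80            # cores cited for a 1TB BW HANA system
BASELINE_DATA_GB = 2000        # 2TB of uncompressed data, in round numbers
VM_THREADS = 32                # largest partition measured in the white paper
THREADS_PER_CORE = 2           # Intel Hyperthreading
MEASURED_SCALABILITY = 0.625   # overall scalability from 4 to 32 threads

vm_cores = VM_THREADS // THREADS_PER_CORE
capacity_share = vm_cores / BASELINE_CORES

# Data that fits if the VM scaled linearly, then at the measured scalability.
linear_data_gb = capacity_share * BASELINE_DATA_GB
realistic_data_gb = linear_data_gb * MEASURED_SCALABILITY

# Response time went from 0.2 seconds at 4 threads to 1 second at 32 threads.
response_increase = (1.0 - 0.2) / 0.2

print(f"VM cores: {vm_cores} ({capacity_share:.0%} of an 80 core system)")
print(f"Uncompressed data at linear scaling:  {linear_data_gb:.0f}GB")
print(f"Uncompressed data at 62.5% scaling:   {realistic_data_gb:.0f}GB")
print(f"Response time increase: {response_increase:.0%}")
```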

Is the SAP 2-tier benchmark a good predictor of database performance?

Answer: Not even close, especially for x86 systems. Sizings for x86 systems based on the 2-tier benchmark can be as much as 50% smaller for database only workloads than would be predicted by the 3-tier benchmark. Bottom line, I recommend that any database only sizings for x86 systems or partitions be at least doubled to ensure that enough capacity is available for the workload. At the same time, IBM Power Systems sizings are extremely conservative and have built in allowances for reality vs. hypothetical 2-tier benchmark based sizings. What follows is a somewhat technical and detailed analysis but this topic cannot, unfortunately, be boiled down into a simple set of assertions.

The details: The SAP Sales and Distribution (S&D) 2-tier benchmark is absolutely vital to SAP sizings as workloads are measured in SAPS (SAP Application Performance Standard)[i], a unit of measurement based on the 2-tier benchmark. The goal of this benchmark is to be hardware independent and useful for all types of workloads, but the reality of this benchmark is quite different. The capacity required for the database server portion of the workload is 7% to 9% of the total capacity with the remainder used by multiple instances of dialog/update servers and a message/enqueue server. This contrasts with the real world where the ratio of app to DB servers is more in the 4 to 1 range for transactional systems and 2 or 1 to 1 for BW. In other words, this benchmark is primarily an application server benchmark with a relatively small database server. Even if a particular system or database software delivered 50% higher performance for the DB server compared to what would be predicted by the 2-tier benchmark, the result on the 2-tier benchmark would only change by .07 * .5 = 3.5%.
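To see how little the database tier moves the 2-tier number, here is a small Python sketch of the approximation used above: the change in the overall result is taken to be the database share of capacity multiplied by the relative change in database performance. The function name is mine, and this is the post's simplification, not a formal model of the benchmark.

```python
def two_tier_change(db_share, db_improvement):
    """Approximate change in the overall 2-tier result.

    db_share:       fraction of total capacity used by the DB server
    db_improvement: relative DB performance gain (0.5 means 50% better)
    """
    return db_share * db_improvement


# The DB server uses 7% to 9% of total capacity in the 2-tier benchmark.
low = two_tier_change(0.07, 0.5)
high = two_tier_change(0.09, 0.5)

print(f"50% better DB with a 7% DB share moves the result by {low:.1%}")
print(f"50% better DB with a 9% DB share moves the result by {high:.1%}")
```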

How then is one supposed to size database servers when the SAP Quicksizer shows the capacity requirements based on 2-tier SAPS? A clue may be found by examining another, closely related SAP benchmark, the S&D 3-tier benchmark. The workload used in this benchmark is identical to that used in the 2-tier benchmark, with the difference being that in the 2-tier benchmark, all instances of DB and App servers must be located within one operating system (OS) image, whereas with the 3-tier benchmark, DB and App server instances may be distributed to multiple different OS images and servers. Unfortunately, the unit of measurement is still SAPS, but this represents the total SAPS handled by all servers working together. Fortunately, 100% of the SAPS must be funneled through the database server, i.e. this SAPS measurement, which I will call DB SAPS, represents the maximum capacity of the DB server.

Now, we can compare different SAPS and DB SAPS results or sizing estimates for various systems to see how well 2-tier and 3-tier SAPS correlate with one another. Turns out, this is easier said than done as there are precious few 3-tier published results available compared to the hundreds of results published for the 2-tier benchmark. But, I would not be posting this blog entry if I did not find a way to accomplish this, would I? I first wanted to find two results on the 3-tier benchmark that achieved similar results. Fortunately, HP and IBM both published results within a month of one another back in 2008, with HP hitting 170,200 DB SAPS[ii] on a 16-core x86 system and IBM hitting 161,520 DB SAPS[iii] on a 4-core Power system.

While the stars did not line up precisely, it turns out that 2-tier results were published by both vendors just a few months earlier, with HP achieving 17,550 SAPS[iv] on the same 16-core x86 system and IBM achieving 10,180 SAPS[v] on a 4-core Power system with a slightly higher clock speed (4.7GHz, or 12% faster) than the one used in the 3-tier benchmark.

Notice that the HP 2-tier result is 72% higher than the IBM result using the faster IBM processor. Clearly, this lead would have been even higher had IBM published a result on the slower processor. While SAP benchmark rules do not allow for estimates of slower to faster processors by vendors, even though I am posting this as an individual and not on behalf of IBM, I will err on the side of caution and give you only the formula, not the estimated result: 17,550 / (10,180 * 4.2 / 4.7) = the ratio of the published HP result to the projected slower IBM processor. At the same time, HP achieved only a 5.4% higher 3-tier result. How does one go from almost twice the performance to essentially tied? Easy answer: the IBM system was designed for database workloads, with a whole boatload of attributes that go almost unused in application server workloads, e.g. extremely high I/O throughput and advanced cache coherency mechanisms.
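The two published comparisons can be checked with a few lines. This sketch uses only the certified results cited in the footnotes and, like the text, deliberately leaves the clock-adjusted projection out; the helper name is mine.

```python
def pct_lead(a: float, b: float) -> float:
    """Percentage by which result a exceeds result b."""
    return (a / b - 1) * 100

# Published 2008 results (see footnotes [ii] through [v]).
HP_2TIER_SAPS = 17_550
IBM_2TIER_SAPS = 10_180     # measured on the 4.7GHz system
HP_3TIER_DB_SAPS = 170_200
IBM_3TIER_DB_SAPS = 161_520

two_tier_lead = pct_lead(HP_2TIER_SAPS, IBM_2TIER_SAPS)
three_tier_lead = pct_lead(HP_3TIER_DB_SAPS, IBM_3TIER_DB_SAPS)

print(f"HP lead on 2-tier: {two_tier_lead:.0f}%")
print(f"HP lead on 3-tier: {three_tier_lead:.1f}%")
```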

One might point out that Intel has really turned up its game since 2008 with the introduction of Nehalem and Westmere chips and closed the gap, somewhat, against IBM’s Power Systems. There is some truth in that, but let’s take a look at a more recent result. In late 2011, HP published a 3-tier result of 175,320 DB SAPS[vi]. A direct comparison of old and new results shows that the new result delivered 3% more performance than the old with 12 cores instead of 16, which works out to about 37% more performance per core. Admittedly, this is not completely correct, as the old benchmark utilized SAP ECC 6.0 with ASCII and the new one used SAP ECC 6.0 EP4 with Unicode, which is estimated to be a 28% higher resource workload, so in reality, this new result is closer to 76% more performance per core. By comparison, a slightly faster DL380 G7[vii], but otherwise almost identical system to the BL460c G7, delivered 112% more SAPS/core on the 2-tier benchmark compared to the BL680c G5 and almost 171% more SAPS/core once the 28% factor mentioned above is taken into consideration. Once again, one would need to adjust these numbers based on differences in MHz and the formula for that would be: either of the above numbers * 3.06/3.33 = estimated SAPS/core.
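The per-core percentages above are easy to get wrong by hand, so here is a short sketch of how they fall out of the published numbers. The 112% 2-tier figure is taken from the text as given rather than recomputed, and all names are illustrative.

```python
# Published 3-tier results (footnotes [ii] and [vi]).
OLD_DB_SAPS, OLD_CORES = 170_200, 16   # BL680c G5, 2008
NEW_DB_SAPS, NEW_CORES = 175_320, 12   # BL460c G7, 2011
UNICODE_FACTOR = 1.28                  # estimated extra load of the newer workload

total_gain = NEW_DB_SAPS / OLD_DB_SAPS
per_core_gain = (NEW_DB_SAPS / NEW_CORES) / (OLD_DB_SAPS / OLD_CORES)
per_core_gain_adjusted = per_core_gain * UNICODE_FACTOR

# 2-tier per-core gain as stated in the text (112% more SAPS/core).
TWO_TIER_PER_CORE_GAIN = 2.12
two_tier_gain_adjusted = TWO_TIER_PER_CORE_GAIN * UNICODE_FACTOR

def as_pct_more(ratio: float) -> float:
    """Convert a ratio such as 1.37 into 'percent more', i.e. 37."""
    return (ratio - 1) * 100

print(f"3-tier total: {as_pct_more(total_gain):.0f}% more")
print(f"3-tier per core: {as_pct_more(per_core_gain):.0f}% more")
print(f"3-tier per core, workload adjusted: {as_pct_more(per_core_gain_adjusted):.0f}% more")
print(f"2-tier per core, workload adjusted: {as_pct_more(two_tier_gain_adjusted):.0f}% more")
```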

After one does this math, one would find that improvement in 2-tier results was almost 3 times the improvement in 3-tier results, further questioning whether the 2-tier benchmark has any relevance to the database tier. And just one more complicating factor: how vendors interpret SAP Quicksizer output. The Quicksizer conveniently breaks down the amount of workload required of both the DB and App tiers. Unfortunately, experience shows that this breakdown does not work in reality, so vendors can make modifications to the ratios based on their experience. Some, such as IBM, have found that DB loads are significantly higher than the Quicksizer estimates and have made sure that this tier is sized higher. Remember, while app servers can scale out horizontally, unless a parallel DB is used, the DB server cannot, so making sure that you don’t run out of capacity is essential. What happens when you compare the sizing from IBM to that of another vendor? That is hard to say since each can use whatever ratio they believe is correct. If you don’t know what ratio the different vendors use, you may be comparing apples and oranges.

Great! Now, what is a customer to do now that I have completely destroyed any illusion that database sizing based on 2-tier SAPS is even remotely close to reality?

One option is to say, “I have no clue” and simply add a fudge factor, perhaps 100%, to the database sizing. One could not be faulted for such a decision as there is no other simple answer. But, one could also not be certain that this sizing was correct. For example, how does I/O throughput fit into the equation? It is possible for a system to be able to handle a certain amount of processing but not be able to feed data in at the rate necessary to sustain that processing. Some virtualization managers, such as VMware, have to transfer data first to the hypervisor and then to the partition, or in the other direction to the disk subsystem. This causes additional latency and overhead and may be hard to estimate.

A better option is to start with IBM. IBM Power Systems is the “gold standard” for SAP open systems database hosting. A huge population of very large SAP customers, some of which have decided to utilize x86 systems for the app tier, use Power for the DB tier. This has allowed IBM to gain real world experience in how to size DB systems which has been incorporated into its sizing methodology. As a result, customers should feel a great deal of trust in the sizing that IBM delivers and once you have this sizing, you can work backwards into what an x86 system should require. Then you can compare this to the sizing delivered by the x86 vendor and have a good discussion about why there are differences. How do you work backwards? A fine question for which I will propose a methodology.

Ideally, IBM would have a 3-tier benchmark for a current system from which you could extrapolate, but that is not the case. Instead, you could extrapolate from the published result for the Power 550 mentioned above using IBM’s rperf, an internal estimate of relative performance for database intensive environments which is published externally. The IBM Power Systems Performance Report[viii] includes rperf ratings for current and past systems. If we multiply the size of the database system as estimated by the IBM ERP sizer by the ratio of per core performance of IBM and x86 systems, we should be able to estimate how much capacity is required on the x86 system. For simplicity, we will assume the sizer has determined that the database requires 10 of the 16 cores of an IBM Power 740 @ 3.55GHz. Here is the proposed formula:

Power 550 DB SAPS x 1/1.28 (old SAPS to new SAPS conversion) x rperf of 740 / rperf of 550

161,520 DB SAPS x 1/1.28 x 176.57 / 36.28 = estimated DB SAPS of 740 @ 16 cores

Then we can divide that above number by the number of cores to get a per core DB SAPS estimate. By the same token you can divide the published HP BL 460c G7 DB SAPS number by the number of cores. Then:

Estimated Power 740 DB SAPS/core / Estimated BL460c G7 DB SAPS/core = ratio to apply to sizing

The result is a ratio of 2.6, e.g. if a workload requires 10 IBM Power 740 3.55GHz cores, it would require 26 BL460c G7 cores. This contrasts with the per core estimated SAPS based on the 2-tier benchmark, which suggests that the Power 740 would have just 1.4 times the performance per core. In other words, a 2-tier based sizing would suggest that the x86 system requires just 14 cores where the 3-tier comparison suggests it actually needs almost twice that. This is assuming the I/O throughput is sufficient. This also assumes that both systems have the same target utilization. In reality, where x86 systems are usually sized for no more than 65% utilization, Power Systems are routinely sized for up to 85% utilization.
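For those who would rather plug in their own systems, the proposed methodology can be sketched as follows. The inputs are the figures quoted above (published DB SAPS, the 1.28 conversion, and the rperf values as stated in the text); the names are mine, and this is an illustration of the estimate, not an official sizing tool.

```python
# Inputs as quoted in the text.
P550_DB_SAPS = 161_520       # published 3-tier result, Power 550
OLD_TO_NEW_SAPS = 1 / 1.28   # old SAPS to new SAPS conversion
RPERF_740 = 176.57           # rperf of the 16-core Power 740 @ 3.55GHz
RPERF_550 = 36.28            # rperf of the 4-core Power 550 @ 4.2GHz
P740_CORES = 16

BL460C_DB_SAPS = 175_320     # published 3-tier result, BL460c G7
BL460C_CORES = 12

# Step 1: estimated DB SAPS of the Power 740 at 16 cores.
est_740_db_saps = P550_DB_SAPS * OLD_TO_NEW_SAPS * RPERF_740 / RPERF_550

# Step 2: per-core DB SAPS for both systems.
p740_per_core = est_740_db_saps / P740_CORES
bl460c_per_core = BL460C_DB_SAPS / BL460C_CORES

# Step 3: ratio to apply to the sizing, rounded as in the text.
ratio = round(p740_per_core / bl460c_per_core, 1)

# Example from the text: a workload sized at 10 Power 740 cores.
power_cores_required = 10
x86_cores_required = power_cores_required * ratio

print(f"Estimated Power 740 DB SAPS/core: {p740_per_core:,.0f}")
print(f"BL460c G7 DB SAPS/core: {bl460c_per_core:,.0f}")
print(f"Ratio: {ratio}")
print(f"x86 cores required: {x86_cores_required:.0f}")
```

Keep in mind that this sketch does nothing about I/O throughput or the difference in target utilization; both still need to be layered on top.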

If this workload was planned to run under VMware, the number of vcpus must be considered, which is twice the number of cores, i.e. this workload would require 52 vcpus, which is over the 32 vcpu limit of VMware 5.0. Even when VMware can handle 64 vcpus, the overhead of VMware and its ability to sustain the high I/O of such a workload must be included in any sizing.

Of course, technology moves on and Intel is into its Gen8 processors. So, you may have to adjust what you believe to be the effective throughput of the x86 system based on relative performance to the BL460c G7 above, but now, at least, you may have a frame of reference for doing the appropriate calculations. Clearly, we have shown that 2-tier is an unreliable benchmark by which to size database only systems or partitions and can easily be off by 100% for x86 systems.

[ii] 170,200 SAPS/34,000 users, HP ProLiant BL680c G5, 4 Processor/16 Core/16 Thread, E7340, 2.4 GHz, Windows Server 2008 Enterprise Edition, SQL Server 2008, Certification # 2008003

[iii] 161,520 SAPS/32,000 users, IBM System p 550, 2 Processor/4 Core/8 Thread, POWER6, 4.2 GHz, AIX 5.3, DB2 9.5, Certification # 2008001

[iv] 17,550 SAPS/3,500 users, HP ProLiant BL680c G5, 4 Processor/16 Core/16 Thread, E7340, 2.4 GHz, Windows Server 2008 Enterprise Edition, SQL Server 2008, Certification # 2007055

[v] 10,180 SAPS/2,035 users, IBM System p 570, 2 Processor/4 Core/8 Thread, POWER6, 4.7 GHz, AIX 6.1, Oracle 10G, Certification # 2007037

[vi] 175,320 SAPS/32,125 users, HP ProLiant BL460c G7, 2 Processor/12 Core/24 Thread, X5675, 3.06 GHz, Windows Server 2008 R2 Enterprise on VMware ESX 5.0, SQL Server 2008, Certification # 2011044